| Managed By | Land Transport Authority |
|---|---|
| Last Updated | February 13, 2018, 14:49 (SGT) |
| Created | April 8, 2016, 15:55 (SGT) |
| Coverage | From March 1, 2016 |
| Frequency | Real-time |
| Source(s) | Land Transport Authority |
| Licence | Singapore Open Data Licence |

Chapter 1 API connection

1.1 About this dataset

Get the latest images from traffic cameras all around Singapore. It returns links to images of live traffic conditions along expressways and Woodlands & Tuas Checkpoints.

- Retrieved every 20 seconds from LTA's Datamall

- Locations of the cameras are also provided in the response

- We recommend calling this endpoint every 3 minutes
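Following the recommendation above, a polling loop might look like the minimal sketch below. It is not part of the original script: the helper names (`fetch_latest`, `poll_traffic_images`) and the fixed number of iterations are illustrative assumptions, and it uses the standard library's `urllib.request` instead of `requests` so that it has no third-party dependency. Passing `fetch` and `sleep` as parameters lets the loop be tried without calling the real API.

```python
import json
import time
import urllib.request

ENDPOINT = "https://api.data.gov.sg/v1/transport/traffic-images"
POLL_INTERVAL_S = 3 * 60  # recommended interval: every 3 minutes


def fetch_latest(url=ENDPOINT, timeout=30):
    """Request the latest snapshot and return the parsed JSON."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def poll_traffic_images(iterations, fetch=fetch_latest, sleep=time.sleep,
                        interval_s=POLL_INTERVAL_S):
    """Call fetch() `iterations` times, waiting interval_s seconds between calls."""
    snapshots = []
    for n in range(iterations):
        snapshots.append(fetch())
        if n < iterations - 1:  # no need to wait after the last call
            sleep(interval_s)
    return snapshots
```

For example, `poll_traffic_images(20)` would collect 20 snapshots spaced three minutes apart, covering roughly one hour.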

The request URL to the API is the following:

https://api.data.gov.sg/v1/transport/traffic-images

You can simply copy and paste the link into your browser to see the JSON response.

Curl:

```bash
$ curl -X GET "https://api.data.gov.sg/v1/transport/traffic-images" -H "accept: application/json"
```

1.2 API example value

```json
{
  "api_info": {
    "status": "healthy"
  },
  "items": [
    {
      "timestamp": "2021-02-01T20:34:23.451Z",
      "cameras": [
        {
          "timestamp": "2021-02-01T20:34:23.451Z",
          "camera_id": 0,
          "image": "string",
          "image_metadata": {
            "height": 0,
            "width": 0,
            "md5": "string"
          }
        }
      ]
    }
  ]
}
```

The parameters we retrieve are timestamp, camera_id and image. Note the timestamp format used when querying the API:

YYYY-MM-DD[T]HH:mm:ss (SGT)
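As a minimal sketch of this format, a Python `datetime` can be rendered with `strftime` and appended to the endpoint as the `date_time` query parameter (the parameter name used by the script in 1.3). The helper `build_request_url` is hypothetical, and the `datetime` is assumed to already be in Singapore time.

```python
import datetime
from urllib.parse import urlencode

ENDPOINT = "https://api.data.gov.sg/v1/transport/traffic-images"


def build_request_url(moment: datetime.datetime) -> str:
    """Return the request URL for the snapshot at `moment` (assumed to be SGT)."""
    # YYYY-MM-DD[T]HH:mm:ss, i.e. a literal "T" between the date and the time
    date_time = moment.strftime("%Y-%m-%dT%H:%M:%S")
    # urlencode percent-encodes the colons (":" becomes "%3A")
    return ENDPOINT + "?" + urlencode({"date_time": date_time})
```

For instance, `build_request_url(datetime.datetime(2020, 1, 20, 8, 0))` returns `https://api.data.gov.sg/v1/transport/traffic-images?date_time=2020-01-20T08%3A00%3A00`. With `requests`, passing `params={"date_time": date_time}` to `requests.get` produces the same encoded query string.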

1.3 Making an API request

First, we import the libraries needed to make GET requests and to work with DataFrames, datetime objects, Dropbox and local file paths.

```python
import requests
import datetime
import dropbox
import os
import pandas as pd
```

Note that these libraries must be installed on your system. The third-party ones can be installed with `pip3 install <library>`, for example `pip3 install requests pandas dropbox`.

Now we can make a request to the URL as follows:

```python
endpoint = "https://api.data.gov.sg/v1/transport/traffic-images"

# Response from the API URL, parsed as JSON
data = requests.get(endpoint).json()

# Print all the items in the JSON data
print(data.get('items'))
```

Once we have explored the API, we extract the desired parameters from the JSON. At this point we select the camera_id whose image URLs we want; we recommend choosing a camera with heavier traffic flow (e.g. 1701). In addition, we define initial and final timestamp variables, which let us retrieve the images captured within a time window (feel free to try different values), and a delta variable giving the interval (in minutes) between consecutive photos.

To this end, we create a function getURLS(date_format), which takes as input the list of timestamp strings spaced delta apart.

```python
def getURLS(date_format):
    # Set a specific camera_id (compared as a string)
    req_camera_id = "1701"

    # Timestamps reported by the matching camera in each response
    time = []

    for i in range(len(date_format)):
        # Adjust timestamps to the correct URL format
        url_date = date_format[i].replace('/', '-')
        endpoint = "https://api.data.gov.sg/v1/transport/traffic-images?date_time=" + url_date
        response = requests.get(endpoint)

        if response:  # True when the status code is below 400, e.g. 200
            data = response.json()  # reuse the response instead of requesting twice
            for item in data['items']:
                for cam in item['cameras']:
                    camera_id = cam['camera_id']
                    if camera_id == req_camera_id:
                        # wanted_cam and wanted_url are created in the main block
                        wanted_cam.append(camera_id)
                        wanted_url.append(cam['image'])
                        time.append(cam['timestamp'])
        # times (list of datetime objects) is also created in the main block
        print(times[i])

    # Use the camera's own timestamps so the three lists always stay aligned
    df = pd.DataFrame(list(zip(wanted_cam, wanted_url, time)),
                      columns=['Cam_id', 'Image', 'Timestamp_sg_time'])
    df.set_index('Cam_id', inplace=True)
    df.to_csv('/your/path/Wed1-20-20.csv', sep=',', encoding='utf-8')


if __name__ == "__main__":
    string_date_list = []

    # Generate timestamps every N minutes. Parameters can be modified.
    # Parameter order: datetime(year, month, day, hours, minutes)
    initial_time = datetime.datetime(2020, 1, 20, 0, 0)
    final_time = datetime.datetime(2020, 1, 21, 0, 0)
    delta = datetime.timedelta(minutes=60)

    times = []
    wanted_url = []
    wanted_cam = []

    while initial_time < final_time:
        times.append(initial_time)
        initial_time += delta

    # Convert datetime objects to a list of strings
    for i in range(len(times)):
        string_date_list.append(times[i].strftime('%Y/%m/%dT%H:%M:%S'))

    getURLS(string_date_list)
```
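The per-camera filtering inside getURLS can also be isolated in a small pure function, which makes it easy to test against a saved or hand-written response without network access. This is a sketch: `extract_camera_records` is a hypothetical helper, and comparing IDs via `str()` is an assumption meant to cover both the integer camera_id shown in the example value and the string "1701" used by the script.

```python
from typing import Any, Dict, List


def extract_camera_records(data: Dict[str, Any], req_camera_id) -> List[Dict[str, str]]:
    """Return camera_id, image URL and timestamp for every match of req_camera_id."""
    records = []
    for item in data.get("items", []):
        for cam in item.get("cameras", []):
            # Compare as strings so "1701" and 1701 are treated the same
            if str(cam.get("camera_id")) == str(req_camera_id):
                records.append({
                    "Cam_id": str(cam["camera_id"]),
                    "Image": cam["image"],
                    "Timestamp_sg_time": cam["timestamp"],
                })
    return records
```

Inside the loop it could be called as `extract_camera_records(requests.get(endpoint).json(), "1701")`; returning one dictionary per match keeps the camera ID, URL and timestamp of each row together.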

Let's review what the code does.

The following for loop iterates over string_date_list (received as date_format), which stores one timestamp per delta step. Every timestamp corresponds to one JSON response, so for each timestamp the loop requests the data and iterates over the items and cameras of the JSON, keeping the entries of the requested camera:

```python
for i in range(len(date_format)):
    # Adjust timestamps to the correct URL format
    url_date = date_format[i].replace('/', '-')
    endpoint = "https://api.data.gov.sg/v1/transport/traffic-images?date_time=" + url_date
    response = requests.get(endpoint)

    if response:  # True when the status code is below 400, e.g. 200
        data = response.json()
        for item in data['items']:
            for cam in item['cameras']:
                camera_id = cam['camera_id']
                if camera_id == req_camera_id:
                    wanted_cam.append(camera_id)
                    wanted_url.append(cam['image'])
                    time.append(cam['timestamp'])
    print(times[i])
```

The next lines of the code combine the three lists (camera IDs, image URLs and timestamps) into a DataFrame, so that each row holds the camera_id, image and timestamp parameters of one JSON response. We add column names (headers) so that the data is easier to manipulate.

```python
df = pd.DataFrame(list(zip(wanted_cam, wanted_url, time)),
                  columns=['Cam_id', 'Image', 'Timestamp_sg_time'])
df.set_index('Cam_id', inplace=True)
df.to_csv('/your/path/Wed1-20-20.csv', sep=',', encoding='utf-8')
```

Now let's look at the main block, where we define the initial_time, final_time and delta variables. Since the API retrieves images every 20 seconds, the returned timestamps will not exactly match the requested ones: the API returns the images closest to each specified point in time.

The while loop appends timestamps spaced by delta (set to 60 minutes, which gives 24 photos over one day) to the times list of datetime objects. Finally, we convert the datetime objects to strings in string_date_list, so that we can pass this list to getURLS.

```python
if __name__ == "__main__":
    req_camera_id = '1701'  # camera to filter by (getURLS also sets it locally)
    string_date_list = []

    # Generate timestamps every delta minutes; initial_time, final_time and
    # delta can be modified
    initial_time = datetime.datetime(2020, 1, 20, 0, 0)
    final_time = datetime.datetime(2020, 1, 21, 0, 0)
    delta = datetime.timedelta(minutes=60)

    times = []       # list of timestamps (datetime objects)
    wanted_url = []  # list of image URLs
    wanted_cam = []  # list of camera_ids

    while initial_time < final_time:
        times.append(initial_time)
        initial_time += delta

    # Convert datetime objects to a list of strings
    for i in range(len(times)):
        # The literal "T" matches the format YYYY-MM-DD[T]HH:mm:ss
        # ('/' is replaced by '-' inside getURLS)
        string_date_list.append(times[i].strftime('%Y/%m/%dT%H:%M:%S'))

    getURLS(string_date_list)
```
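To check the arithmetic behind "60 minutes, 24 photos", the timestamp generation from the main block can be wrapped in a small function. `make_request_times` is a hypothetical name, not part of the original script; it formats the strings with dashes directly, so the `replace('/', '-')` step in getURLS would not be needed if you used it. The number of timestamps is the window length divided by delta, rounded up: 1440 minutes / 60 minutes = 24.

```python
import datetime
from typing import List


def make_request_times(initial_time: datetime.datetime,
                       final_time: datetime.datetime,
                       delta: datetime.timedelta) -> List[str]:
    """Timestamps from initial_time (inclusive) to final_time (exclusive), every delta."""
    if delta <= datetime.timedelta(0):
        raise ValueError("delta must be positive")  # avoid an endless loop
    times = []
    current = initial_time
    while current < final_time:
        times.append(current.strftime("%Y-%m-%dT%H:%M:%S"))
        current += delta
    return times
```

With the values from the main block the list holds 24 strings, from `2020-01-20T00:00:00` to `2020-01-20T23:00:00`; with a delta of 20 minutes it would hold 72.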

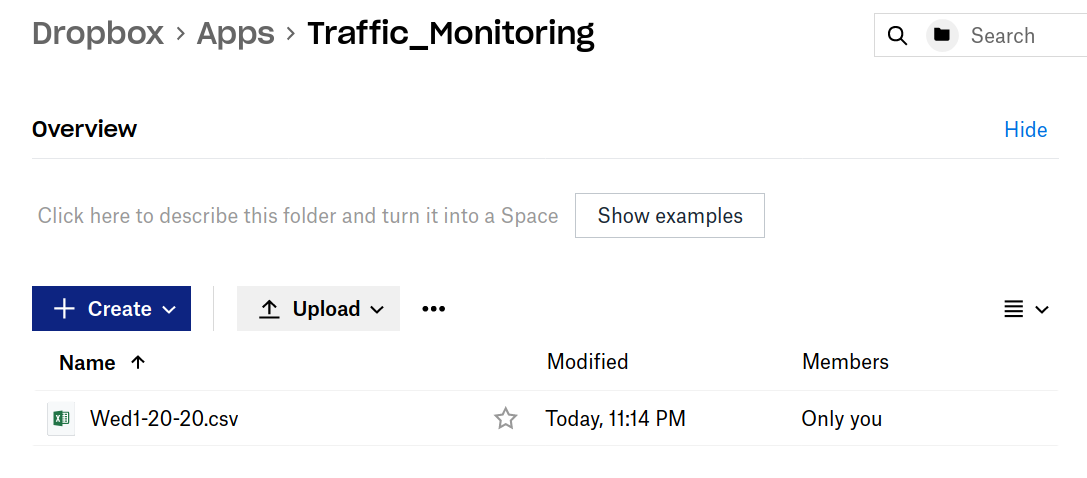

1.4 Upload files to Dropbox

Since the next chapter involves Google Colab, our files need to be available in the cloud. To this end, we create a function that uploads them using a personal access token; more precisely, we'll use Dropbox.

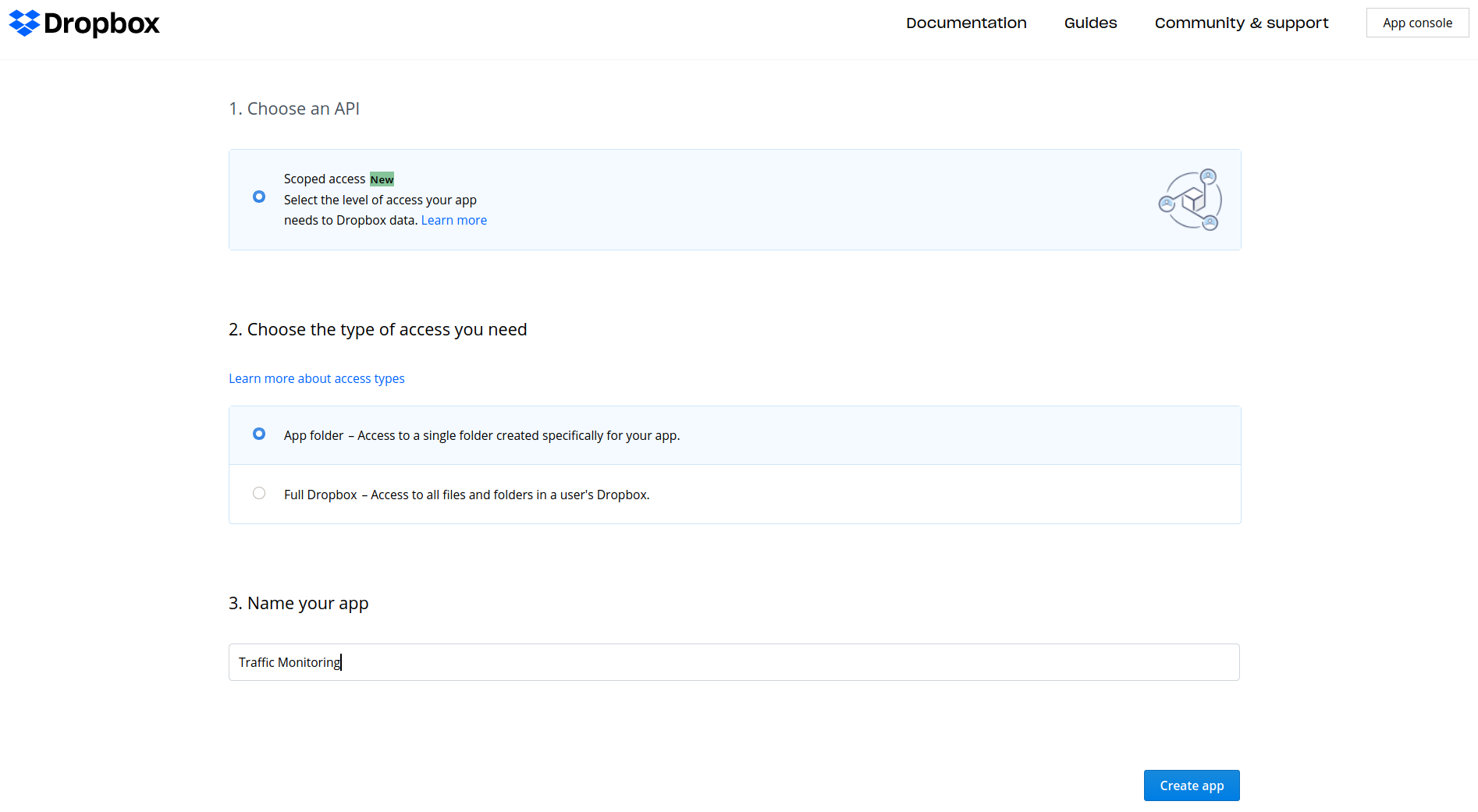

Log in to your Dropbox account (register one if you don't have it yet) and create an app in Developer apps.

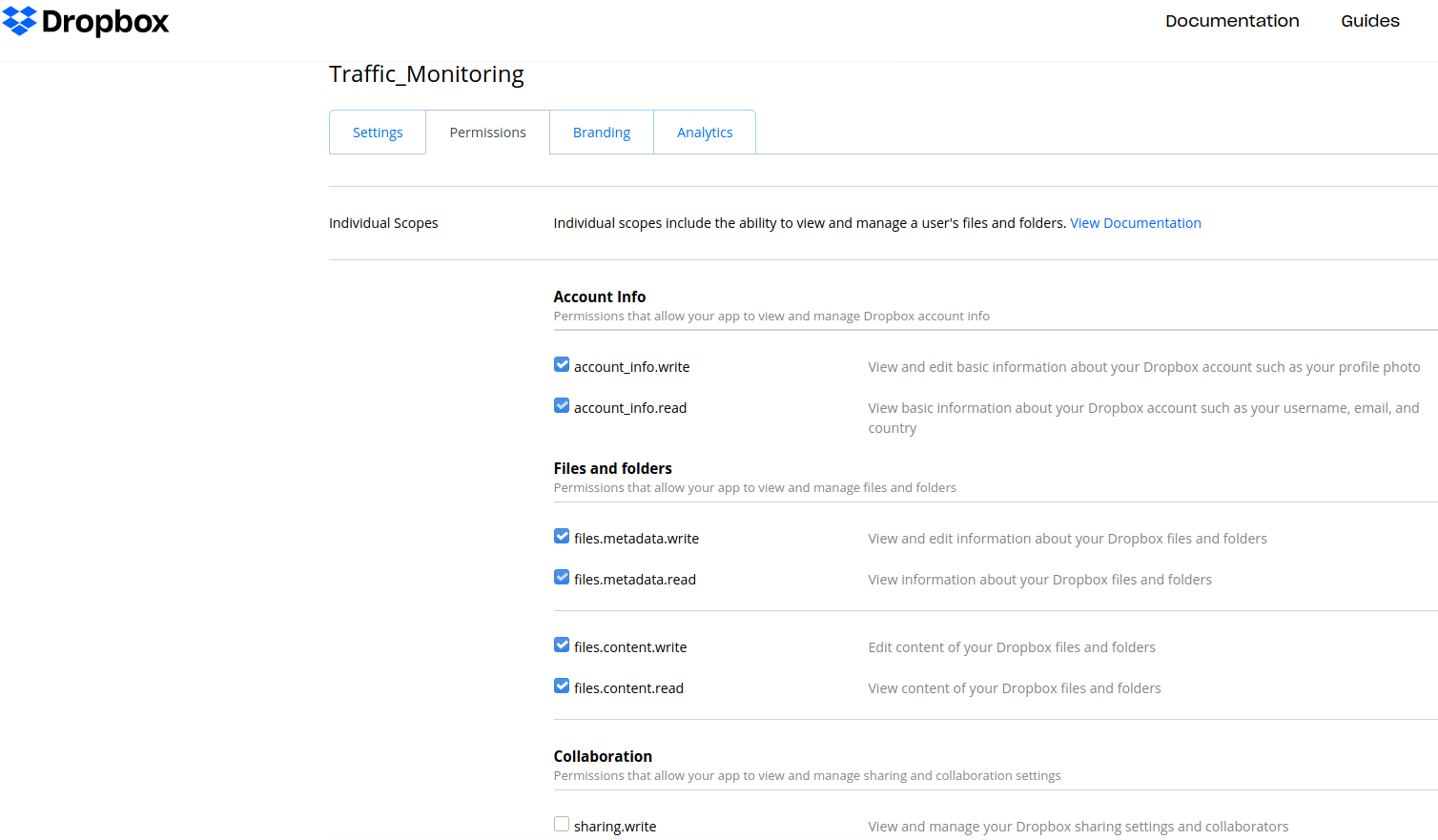

Set read and write permissions on the app. The token needs the scope required by each API route it calls (for uploads, files.content.write); otherwise, the Dropbox API will throw a TokenScopeError.

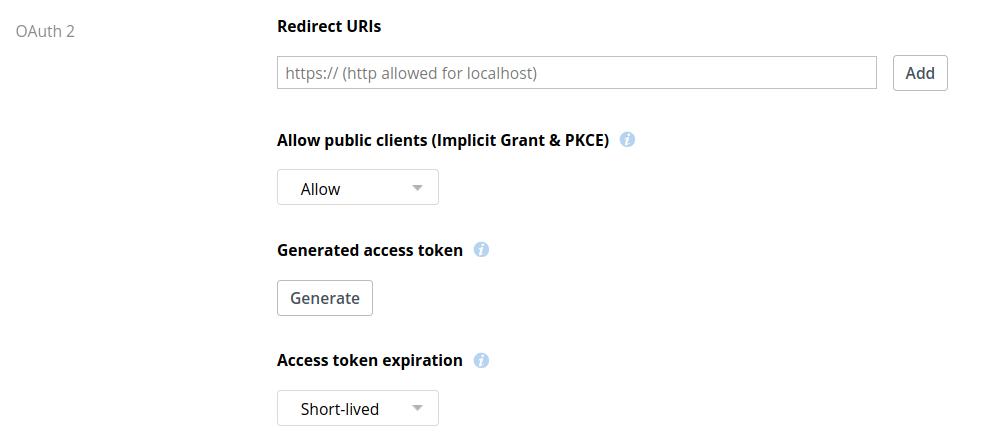

In the OAuth 2 section on the left, click the "Generate" button to create an access token. Copy the token and paste it as a string where the dbx variable is created.

Note: the generated token lasts 4 hours unless you change the Access token expiration setting.

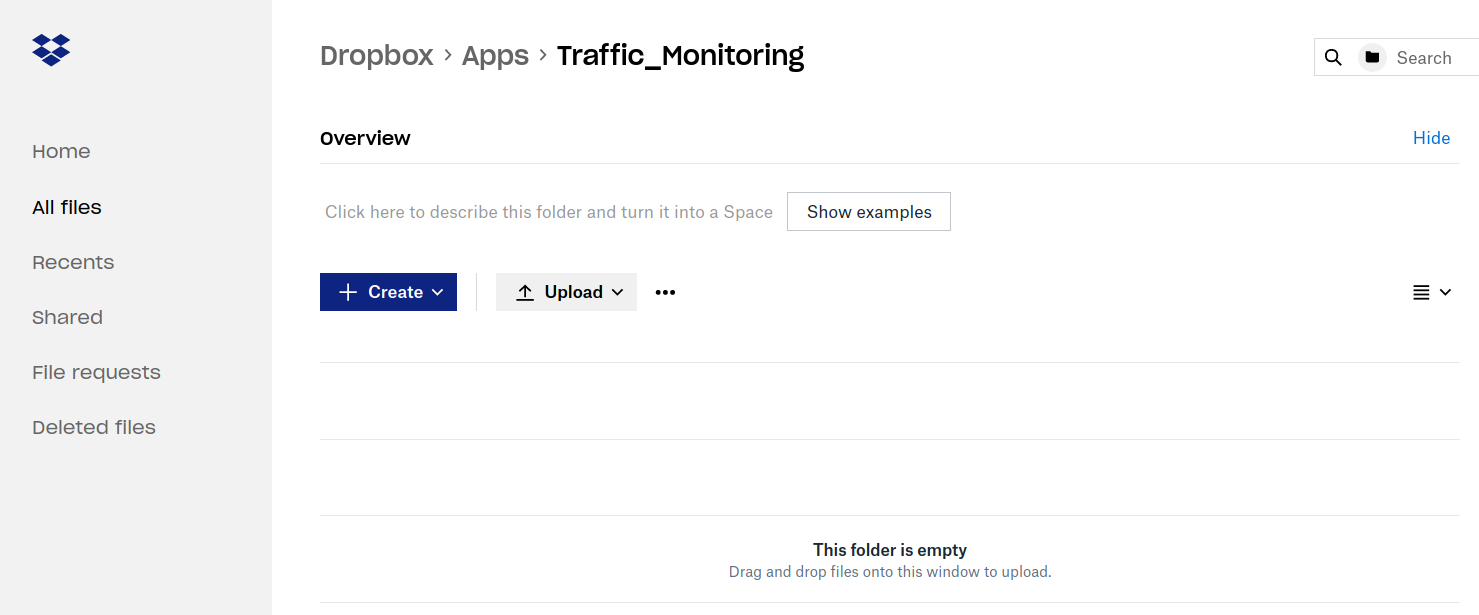

Then, we log in to our Dropbox account, where the app has now been created in our Apps directory.

Here's the function that sends our file to Dropbox. You can also upload the file manually, but once the correct paths are configured, the script uploads it automatically.

```python
def uploadToDropbox():
    # Generate your access token as described in the Dropbox developers OAuth guide
    dbx = dropbox.Dropbox('Put your token here')

    # Local path where you have stored the csv file
    rootdir = '/your/path/'

    print("Attempting to upload...")

    # os.walk yields a (dirpath, dirnames, filenames) tuple for rootdir
    # and for each of its subfolders
    for dirpath, dirnames, filenames in os.walk(rootdir):
        for file in filenames:
            try:
                file_path = os.path.join(dirpath, file)
                dest_path = '/' + file  # root folder of the Dropbox app
                print('Uploading %s to %s' % (file_path, dest_path))
                with open(file_path, "rb") as f:
                    # overwrite lets the script be re-run without a path conflict
                    dbx.files_upload(f.read(), dest_path,
                                     mode=dropbox.files.WriteMode.overwrite,
                                     mute=True)
                print("Finished upload.")
            except Exception as err:
                print("Failed to upload %s\n%s" % (file, err))
```

We can then call uploadToDropbox() in the main block, right after the getURLS(string_date_list) line.
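Because uploadToDropbox walks the whole rootdir, every file in it (including files in subfolders) ends up in the root of the app folder, so files with the same name in different subfolders would collide. The sketch below uses a hypothetical helper, `plan_uploads` (not part of the Dropbox SDK), that lists the local-to-Dropbox path pairs without uploading anything, so you can check what would be sent before running the real upload.

```python
import os
from typing import List, Tuple


def plan_uploads(rootdir: str) -> List[Tuple[str, str]]:
    """Pair every local file under rootdir with its destination in the app folder."""
    plan = []
    for dirpath, _dirnames, filenames in os.walk(rootdir):
        for filename in filenames:
            local_path = os.path.join(dirpath, filename)
            # Dropbox paths always use "/", regardless of the local OS
            dest_path = "/" + filename
            plan.append((local_path, dest_path))
    return sorted(plan)
```

Printing `plan_uploads('/your/path/')` before uploading shows, for example, whether two files would both map to the same Dropbox path.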

You can download the complete script here and run it in your preferred IDE.

Once we run the script, the file Wed1-20-20.csv is saved to your local path and uploaded to your Dropbox app.

The output of the code looks like this:

```
2020-01-20 00:00:00
2020-01-20 01:00:00
2020-01-20 02:00:00
2020-01-20 03:00:00
2020-01-20 04:00:00
2020-01-20 05:00:00
2020-01-20 06:00:00
2020-01-20 07:00:00
2020-01-20 08:00:00
2020-01-20 09:00:00
2020-01-20 10:00:00
2020-01-20 11:00:00
2020-01-20 12:00:00
2020-01-20 13:00:00
2020-01-20 14:00:00
2020-01-20 15:00:00
2020-01-20 16:00:00
2020-01-20 17:00:00
2020-01-20 18:00:00
2020-01-20 19:00:00
2020-01-20 20:00:00
2020-01-20 21:00:00
2020-01-20 22:00:00
2020-01-20 23:00:00
Attempting to upload...
Uploading /home/Wed1-20-20.csv to /Wed1-20-20.csv
Finished upload.
```

Finally, the csv file should look like this: